Feature Lifecycle Stages—the "4 Rs"

Feature Lifecycle Analysis uses a simple four-stage model of the life of a product feature.

- Reveal

  - Introducing and releasing new functionality.

- Refine

  - Improving or enhancing an existing feature. It is how we make good features great.

- Revise

  - Bug fixing, refactoring, updating libraries. All the work we need to keep a feature alive and performing without necessarily altering the core functionality.

- Retire

  - Removing features that have become obsolete or redundant.

What is Feature Lifecycle Analysis?

Feature Lifecycle Analysis is a technique for agile software teams (developers and product owners) working on products that are expected to live long and prosper. It helps balance priorities and identify systemic issues that may not be evident on the usual sprint planning timescale.

It shows how much effort is allocated to the major stages of the feature lifecycle: from initial release to ultimate retirement.

Feature Lifecycle Analysis doesn't provide direct answers. It is a diagnostic providing a fresh perspective on a project, and the basis for helping a team reflect on symptoms, underlying problems and hopefully solutions.

It can be done on paper, a whiteboard, or a spreadsheet. If you use Pivotal Tracker, then your project can be analysed automatically here.
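As a rough illustration of the tally involved, the sketch below computes the percentage of effort that falls into each of the four stages. The story shape (`stage`, `points`) and the function name `effortShareByStage` are assumptions made for this example, not the format used by the analysis tool; story counts could stand in for points just as well.

```javascript
// Minimal sketch: share of effort per lifecycle stage.
// The story shape ({ stage, points }) is hypothetical, chosen for illustration.
const STAGES = ["reveal", "refine", "revise", "retire"];

function effortShareByStage(stories) {
  // Start every stage at zero so that missing stages still appear in the result.
  const totals = { reveal: 0, refine: 0, revise: 0, retire: 0 };
  for (const story of stories) {
    // Ignore stories that have not been assigned one of the four stages.
    if (STAGES.includes(story.stage)) {
      totals[story.stage] += story.points;
    }
  }
  const grandTotal = STAGES.reduce((sum, stage) => sum + totals[stage], 0);
  const share = {};
  for (const stage of STAGES) {
    // Whole-number percentages; an empty project yields 0 for every stage.
    share[stage] = grandTotal === 0 ? 0 : Math.round((100 * totals[stage]) / grandTotal);
  }
  return share;
}

// Example with made-up numbers (20 points in total).
const exampleShare = effortShareByStage([
  { stage: "reveal", points: 5 },
  { stage: "reveal", points: 3 },
  { stage: "refine", points: 2 },
  { stage: "revise", points: 8 },
  { stage: "retire", points: 2 },
]);
console.log(exampleShare); // { reveal: 40, refine: 10, revise: 40, retire: 10 }
```

The same arithmetic works on a whiteboard: add up the effort per stage, then divide by the total.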

A Question of Balance

If we are using an agile software development process such as Scrum, then isn't Feature Lifecycle Analysis redundant?

In an ideal world with a well-balanced and high-performing team, we should be able to find our own optimal balance by trusting the process:

- customer/business requirements rule, and are represented in the team through a product owner or even an onsite customer

- we prioritise work for each iteration as a team, balancing customer/business requirements with other things that the team knows are important in order to meet customer expectations (like performance)

- we keep our iterations short and continuously deliver working software, so even if we get things wrong we can course-correct in short order

That is of course the ideal. But we operate in the real world, and many things can upset the balance of our projects, leading to less-than-ideal outcomes. For example:

- Urgency Trumps Impact

  - It's easy to find a queue of people ready to argue for the latest urgent requirement, but there's no-one around to champion the more impactful stuff that hasn't also been called out as urgent. Result? Six months later, we've delivered all the urgent things, but 90% of the potential impact ("value") is still on the table.

- The New Shiny

  - The new shiny trumps last week's idea. This can be a problem in startups where the founder/ideas person is also the product owner: excellent at generating new ideas, but with no time or ability to follow through. So the team leaves a trail of half-baked implementations but never seems to achieve anything great.

- Uninspired Leadership

  - The team is looking for insight and vision to drive priorities but just hears "meh". So the results are naturally also meh.

- Unreal Priorities

  - A product owner who is (consciously or not) skewing priorities towards a certain theme or faction over all others, like one particularly vocal customer. Or perhaps, for lack of real customer insight, the priorities are simply out of touch with reality.

- Weak Team

  - A weak development team that's not able to put up a convincing case for something they believe is important.

- Domineering Team

  - Conversely, a development team that is too strong and always manages to spin the product owner to their way of thinking.

- Group Think

  - Although we use a strict planning game to prioritise stories, the team is suffering from "group think" and priorities end up skewed to one point of view.

- ScrumMaster At Sea

  - A ScrumMaster who doesn't recognise there's an issue of balance until it is too late, or who is running out of tricks to help the team fix it.

Feature Lifecycle Analysis is really just a diagnostic that can present in a picture what may otherwise just be a gut feeling that something is not quite right.

New Project (14 Sprints)

This is a real project that kicked off a few months ago with a small new team.

Reflections on the analysis:

- The peak in refinement and revision looks like payback for rushing new features and not paying sufficient attention to code quality. (In fact this is true, but the refactoring effort and team-leveling in June will hopefully prevent it from repeating.)

- We hope/expect to see a continuation of new features appearing, with slightly more effort in refinement, but a drop-off of revision.

- It is too early to expect features retiring, but some should start coming through as early product ideas prove "unuseful" in practice.

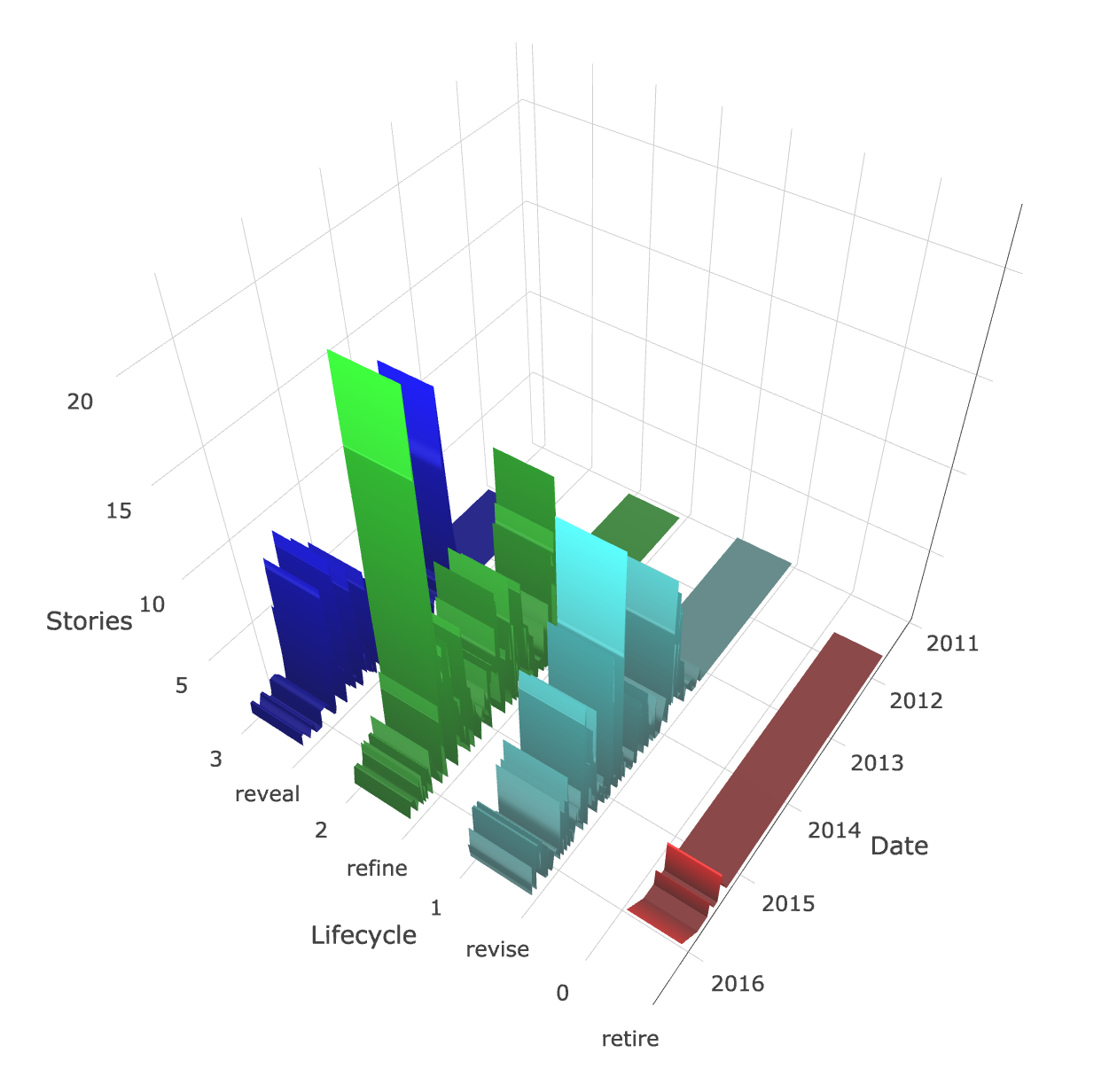

Mature Project (5 years)

This project started in Dec-2010 and went live in Apr-2011. It is still actively maintained. We only started doing 4Rs tagging in 2013.

Reflections on the analysis:

- This is what I'd call a healthy balance for a long-lived project that remains actively maintained.

- There's at least as much effort going into refinement as revisions. This is good, as it reflects an effort to hone and polish features rather than just maintaining the status quo.

- There's a periodic focus on new feature development, with active maintenance and improvement between times. This is what you'd expect in an "old" project: there isn't the ROI for continual new feature development, but neither is it being left to fester and be locked into a feature set 5 years out of date.

- There's a willingness to deprecate obsolete features. If these weren't removed, they would stay in the application and just add to the maintenance overhead.

How the Analysis Works

This is a pure JavaScript client-side application. It collects story data directly from the Pivotal Tracker API for analysis and charting in the browser.

Requirements for a "Good" Analysis

- at least a few weeks of project data (stories are aggregated by week)

- a good number of stories with labels matching the 4 lifecycle stages

- adjust the story label matching fields to correspond to the labels used in your project
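The label matching and weekly aggregation described above might look roughly like the sketch below. It is an illustration under stated assumptions, not the tool's actual code: the simplified story shape (`labels` as plain strings, an `acceptedAt` timestamp), the `stageLabels` mapping, and all function names are hypothetical. The source does not say which date the weekly buckets are based on, nor whether stories or points are counted; this sketch counts stories per Monday-based UTC week.

```javascript
// Hypothetical mapping from lifecycle stage to the labels that count towards it.
// Adjust the label lists to match the labels used in your own project.
const stageLabels = {
  reveal: ["reveal"],
  refine: ["refine"],
  revise: ["revise", "bug"],
  retire: ["retire"],
};

// Return the first stage with a matching label, or null if nothing matches.
function stageForStory(story, mapping) {
  for (const [stage, labels] of Object.entries(mapping)) {
    if (story.labels.some((label) => labels.includes(label))) return stage;
  }
  return null;
}

// Return the date (YYYY-MM-DD, UTC) of the Monday that starts the story's week.
function weekStart(isoTimestamp) {
  const date = new Date(isoTimestamp);
  const daysSinceMonday = (date.getUTCDay() + 6) % 7; // Sunday is 0, so shift it to 6
  date.setUTCDate(date.getUTCDate() - daysSinceMonday);
  return date.toISOString().slice(0, 10);
}

// Count stories per stage for each week; stories with no matching label are skipped.
function aggregateByWeek(stories, mapping) {
  const weeks = {};
  for (const story of stories) {
    const stage = stageForStory(story, mapping);
    if (stage === null) continue;
    const week = weekStart(story.acceptedAt);
    if (!weeks[week]) {
      weeks[week] = Object.fromEntries(Object.keys(mapping).map((s) => [s, 0]));
    }
    weeks[week][stage] += 1;
  }
  return weeks;
}

// Example with made-up stories.
const weeklyCounts = aggregateByWeek(
  [
    { labels: ["reveal", "ui"], acceptedAt: "2015-06-03T10:00:00Z" },
    { labels: ["bug"], acceptedAt: "2015-06-07T23:00:00Z" },
    { labels: ["refine"], acceptedAt: "2015-06-08T09:00:00Z" },
  ],
  stageLabels
);
console.log(weeklyCounts);
```

Stories that carry none of the mapped labels drop out of the picture entirely, which is why a good number of consistently labelled stories matters for a meaningful chart.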

Security

The analysis uses your API key (get it from your Pivotal Tracker Profile page). The API key is used only to communicate securely from your browser to the Pivotal Tracker API. It is not stored in cookies or sent to any other destination. Since this is an open-source project, you can check this.
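To make the claim concrete, here is a minimal sketch of how a browser client can pass the key to Pivotal Tracker and nowhere else. It assumes the v5 REST API, which accepts the token in an `X-TrackerToken` request header; the function name `buildStoriesRequest` and the overall structure are hypothetical and not taken from this project's source.

```javascript
// Sketch only: build the request for a project's stories without sending it.
// Assumes the Pivotal Tracker v5 REST API and its X-TrackerToken header.
function buildStoriesRequest(projectId, apiToken) {
  return {
    // HTTPS endpoint on pivotaltracker.com; no other host is involved.
    url:
      "https://www.pivotaltracker.com/services/v5/projects/" +
      encodeURIComponent(projectId) +
      "/stories",
    options: {
      method: "GET",
      // The token travels only in this request header. It is held in memory
      // and never written to cookies or local storage.
      headers: { "X-TrackerToken": apiToken },
    },
  };
}

// In the browser, the request could then be sent with the standard fetch API:
//   const { url, options } = buildStoriesRequest(projectId, apiToken);
//   const stories = await fetch(url, options).then((response) => response.json());
```

Keeping request construction in one small function makes it easy to audit where the key goes.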